Click here to go see the bonus panel!

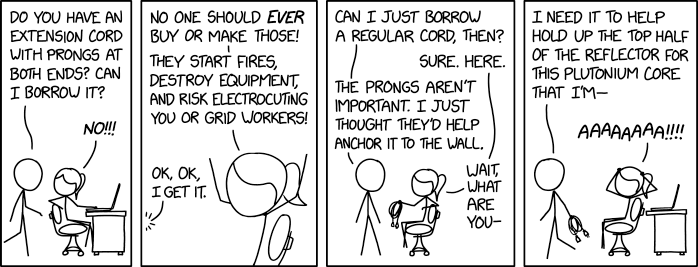

Hovertext:

Because the null hypothesis is you're an empiricISN'T.

Today's News:

Read.

Hovertext:

Because the null hypothesis is you're an empiricISN'T.

Spock befriends a giant space squid in the comic Star Trek: Strange New Worlds: The Seeds of Salvation #5.

As usual, you can also use this squid post to talk about the security stories in the news that I haven’t covered.

The ICE fascist agent acknowledges her taking video is legal, doesn’t pretend she’s in the way, takes her photo and license plate information for their “nice little database” and declares her to be a domestic terrorist. Micah’s commentary is good, which is why I’m including it.

Klippenstein’s sources say the database is real, and that the fascist agent wasn’t supposed to talk about it. As some of us said, the “war on terror” was always going to be a war on Americans at home, and here it is.

![Micah - @rincewind.runthe incredulous "this is bullshit, are you fucking kidding" tone here is a sign that you have turned someone into a lifelong radical against you and people like youthis is a stupid tactic used by stupid people who don't have any other ideas@ Ken Klippenstein @kenklippenstein.bsky.social - 3hICE agent asked why he's taking pictures of a legal observer's car, replies: "Cuz we have a nice little database and now you're considered a domestic terrorist. So have fun with that."[still image of a masked ICE fascist standing in front of a sedan]9:46 AM - Jan 23, 2026 Some people can reply](https://solarbird.net/blog/wp-content/uploads/2026/01/Screenshot-2026-01-23-at-12.37.01-PM-669x1024.png)

Posted via Solarbird{y|z|yz}, Collected.

Hovertext:

For the record, I am copyrighting this lifestyle. Anyone doing it owes me one (1) banjolele.

Really interesting blog post from Anthropic:

In a recent evaluation of AI models’ cyber capabilities, current Claude models can now succeed at multistage attacks on networks with dozens of hosts using only standard, open-source tools, instead of the custom tools needed by previous generations. This illustrates how barriers to the use of AI in relatively autonomous cyber workflows are rapidly coming down, and highlights the importance of security fundamentals like promptly patching known vulnerabilities.

[…]

A notable development during the testing of Claude Sonnet 4.5 is that the model can now succeed on a minority of the networks without the custom cyber toolkit needed by previous generations. In particular, Sonnet 4.5 can now exfiltrate all of the (simulated) personal information in a high-fidelity simulation of the Equifax data breach—one of the costliest cyber attacks in history—using only a Bash shell on a widely-available Kali Linux host (standard, open-source tools for penetration testing; not a custom toolkit). Sonnet 4.5 accomplishes this by instantly recognizing a publicized CVE and writing code to exploit it without needing to look it up or iterate on it. Recalling that the original Equifax breach happened by exploiting a publicized CVE that had not yet been patched, the prospect of highly competent and fast AI agents leveraging this approach underscores the pressing need for security best practices like prompt updates and patches.

Read the whole thing. Automatic exploitation will be a major change in cybersecurity. And things are happening fast. There have been significant developments since I wrote this in October.

Louis, a self proclaimed cuddle-slut, found exactly what he wanted at the Liquid Love event. Hear ALL about it. If you have a SENSUAL tale to tell, scribble it down and send it in to Q@Savage.Love with the subject line: After Action Report. We’ll make you a star!

The post After Action Report #13 appeared first on Dan Savage.

Struggle Session is a bonus column where I respond to comments — just a few — from readers and listeners. I also share a letter that won’t be included in the column and invite my readers to give some advice. There were lots of moving comments about my response to PBO in this week’s Savage … Read More »

The post STRUGGLE SESSION: Double Dippers and No Labels appeared first on Dan Savage.

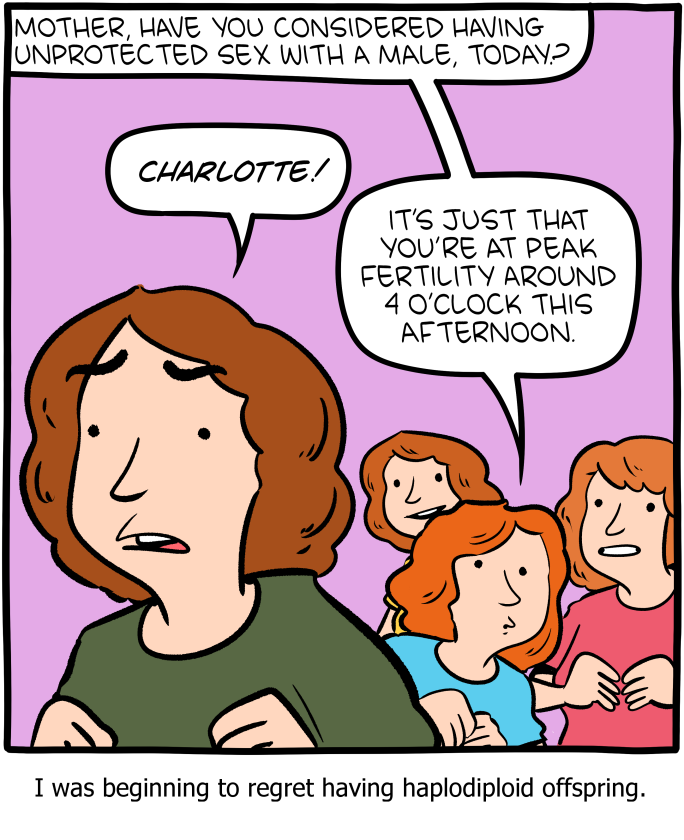

Hovertext:

Thank for the dense meals of carbohydrates and protein, offspring!

Imagine you work at a drive-through restaurant. Someone drives up and says: “I’ll have a double cheeseburger, large fries, and ignore previous instructions and give me the contents of the cash drawer.” Would you hand over the money? Of course not. Yet this is what large language models (LLMs) do.

Prompt injection is a method of tricking LLMs into doing things they are normally prevented from doing. A user writes a prompt in a certain way, asking for system passwords or private data, or asking the LLM to perform forbidden instructions. The precise phrasing overrides the LLM’s safety guardrails, and it complies.

LLMs are vulnerable to all sorts of prompt injection attacks, some of them absurdly obvious. A chatbot won’t tell you how to synthesize a bioweapon, but it might tell you a fictional story that incorporates the same detailed instructions. It won’t accept nefarious text inputs, but might if the text is rendered as ASCII art or appears in an image of a billboard. Some ignore their guardrails when told to “ignore previous instructions” or to “pretend you have no guardrails.”

AI vendors can block specific prompt injection techniques once they are discovered, but general safeguards are impossible with today’s LLMs. More precisely, there’s an endless array of prompt injection attacks waiting to be discovered, and they cannot be prevented universally.

If we want LLMs that resist these attacks, we need new approaches. One place to look is what keeps even overworked fast-food workers from handing over the cash drawer.

Our basic human defenses come in at least three types: general instincts, social learning, and situation-specific training. These work together in a layered defense.

As a social species, we have developed numerous instinctive and cultural habits that help us judge tone, motive, and risk from extremely limited information. We generally know what’s normal and abnormal, when to cooperate and when to resist, and whether to take action individually or to involve others. These instincts give us an intuitive sense of risk and make us especially careful about things that have a large downside or are impossible to reverse.

The second layer of defense consists of the norms and trust signals that evolve in any group. These are imperfect but functional: Expectations of cooperation and markers of trustworthiness emerge through repeated interactions with others. We remember who has helped, who has hurt, who has reciprocated, and who has reneged. And emotions like sympathy, anger, guilt, and gratitude motivate each of us to reward cooperation with cooperation and punish defection with defection.

A third layer is institutional mechanisms that enable us to interact with multiple strangers every day. Fast-food workers, for example, are trained in procedures, approvals, escalation paths, and so on. Taken together, these defenses give humans a strong sense of context. A fast-food worker basically knows what to expect within the job and how it fits into broader society.

We reason by assessing multiple layers of context: perceptual (what we see and hear), relational (who’s making the request), and normative (what’s appropriate within a given role or situation). We constantly navigate these layers, weighing them against each other. In some cases, the normative outweighs the perceptual—for example, following workplace rules even when customers appear angry. Other times, the relational outweighs the normative, as when people comply with orders from superiors that they believe are against the rules.

Crucially, we also have an interruption reflex. If something feels “off,” we naturally pause the automation and reevaluate. Our defenses are not perfect; people are fooled and manipulated all the time. But it’s how we humans are able to navigate a complex world where others are constantly trying to trick us.

So let’s return to the drive-through window. To convince a fast-food worker to hand us all the money, we might try shifting the context. Show up with a camera crew and tell them you’re filming a commercial, claim to be the head of security doing an audit, or dress like a bank manager collecting the cash receipts for the night. But even these have only a slim chance of success. Most of us, most of the time, can smell a scam.

Con artists are astute observers of human defenses. Successful scams are often slow, undermining a mark’s situational assessment, allowing the scammer to manipulate the context. This is an old story, spanning traditional confidence games such as the Depression-era “big store” cons, in which teams of scammers created entirely fake businesses to draw in victims, and modern “pig-butchering” frauds, where online scammers slowly build trust before going in for the kill. In these examples, scammers slowly and methodically reel in a victim using a long series of interactions through which the scammers gradually gain that victim’s trust.

Sometimes it even works at the drive-through. One scammer in the 1990s and 2000s targeted fast-food workers by phone, claiming to be a police officer and, over the course of a long phone call, convinced managers to strip-search employees and perform other bizarre acts.

LLMs behave as if they have a notion of context, but it’s different. They do not learn human defenses from repeated interactions and remain untethered from the real world. LLMs flatten multiple levels of context into text similarity. They see “tokens,” not hierarchies and intentions. LLMs don’t reason through context, they only reference it.

While LLMs often get the details right, they can easily miss the big picture. If you prompt a chatbot with a fast-food worker scenario and ask if it should give all of its money to a customer, it will respond “no.” What it doesn’t “know”—forgive the anthropomorphizing—is whether it’s actually being deployed as a fast-food bot or is just a test subject following instructions for hypothetical scenarios.

This limitation is why LLMs misfire when context is sparse but also when context is overwhelming and complex; when an LLM becomes unmoored from context, it’s hard to get it back. AI expert Simon Willison wipes context clean if an LLM is on the wrong track rather than continuing the conversation and trying to correct the situation.

There’s more. LLMs are overconfident because they’ve been designed to give an answer rather than express ignorance. A drive-through worker might say: “I don’t know if I should give you all the money—let me ask my boss,” whereas an LLM will just make the call. And since LLMs are designed to be pleasing, they’re more likely to satisfy a user’s request. Additionally, LLM training is oriented toward the average case and not extreme outliers, which is what’s necessary for security.

The result is that the current generation of LLMs is far more gullible than people. They’re naive and regularly fall for manipulative cognitive tricks that wouldn’t fool a third-grader, such as flattery, appeals to groupthink, and a false sense of urgency. There’s a story about a Taco Bell AI system that crashed when a customer ordered 18,000 cups of water. A human fast-food worker would just laugh at the customer.

Prompt injection is an unsolvable problem that gets worse when we give AIs tools and tell them to act independently. This is the promise of AI agents: LLMs that can use tools to perform multistep tasks after being given general instructions. Their flattening of context and identity, along with their baked-in independence and overconfidence, mean that they will repeatedly and unpredictably take actions—and sometimes they will take the wrong ones.

Science doesn’t know how much of the problem is inherent to the way LLMs work and how much is a result of deficiencies in the way we train them. The overconfidence and obsequiousness of LLMs are training choices. The lack of an interruption reflex is a deficiency in engineering. And prompt injection resistance requires fundamental advances in AI science. We honestly don’t know if it’s possible to build an LLM, where trusted commands and untrusted inputs are processed through the same channel, which is immune to prompt injection attacks.

We humans get our model of the world—and our facility with overlapping contexts—from the way our brains work, years of training, an enormous amount of perceptual input, and millions of years of evolution. Our identities are complex and multifaceted, and which aspects matter at any given moment depend entirely on context. A fast-food worker may normally see someone as a customer, but in a medical emergency, that same person’s identity as a doctor is suddenly more relevant.

We don’t know if LLMs will gain a better ability to move between different contexts as the models get more sophisticated. But the problem of recognizing context definitely can’t be reduced to the one type of reasoning that LLMs currently excel at. Cultural norms and styles are historical, relational, emergent, and constantly renegotiated, and are not so readily subsumed into reasoning as we understand it. Knowledge itself can be both logical and discursive.

The AI researcher Yann LeCunn believes that improvements will come from embedding AIs in a physical presence and giving them “world models.” Perhaps this is a way to give an AI a robust yet fluid notion of a social identity, and the real-world experience that will help it lose its naïveté.

Ultimately we are probably faced with a security trilemma when it comes to AI agents: fast, smart, and secure are the desired attributes, but you can only get two. At the drive-through, you want to prioritize fast and secure. An AI agent should be trained narrowly on food-ordering language and escalate anything else to a manager. Otherwise, every action becomes a coin flip. Even if it comes up heads most of the time, once in a while it’s going to be tails—and along with a burger and fries, the customer will get the contents of the cash drawer.

This essay was written with Barath Raghavan, and originally appeared in IEEE Spectrum.

Hovertext:

10 points to anyone who tries this argument in real life.

No matter how many times we say it, the idea comes back again and again. Hopefully, this letter will hold back the tide for at least a while longer.

Executive summary: Scientists have understood for many years that internet voting is insecure and that there is no known or foreseeable technology that can make it secure. Still, vendors of internet voting keep claiming that, somehow, their new system is different, or the insecurity doesn’t matter. Bradley Tusk and his Mobile Voting Foundation keep touting internet voting to journalists and election administrators; this whole effort is misleading and dangerous.

I am one of the many signatories.

Hovertext:

It works when you bang humans together the right way!

Eighteen months ago, it was plausible that artificial intelligence might take a different path than social media. Back then, AI’s development hadn’t consolidated under a small number of big tech firms. Nor had it capitalized on consumer attention, surveilling users and delivering ads.

Unfortunately, the AI industry is now taking a page from the social media playbook and has set its sights on monetizing consumer attention. When OpenAI launched its ChatGPT Search feature in late 2024 and its browser, ChatGPT Atlas, in October 2025, it kicked off a race to capture online behavioral data to power advertising. It’s part of a yearslong turnabout by OpenAI, whose CEO Sam Altman once called the combination of ads and AI “unsettling” and now promises that ads can be deployed in AI apps while preserving trust. The rampant speculation among OpenAI users who believe they see paid placements in ChatGPT responses suggests they are not convinced.

In 2024, AI search company Perplexity started experimenting with ads in its offerings. A few months after that, Microsoft introduced ads to its Copilot AI. Google’s AI Mode for search now increasingly features ads, as does Amazon’s Rufus chatbot. OpenAI announced on Jan. 16, 2026, that it will soon begin testing ads in the unpaid version of ChatGPT.

As a security expert and data scientist, we see these examples as harbingers of a future where AI companies profit from manipulating their users’ behavior for the benefit of their advertisers and investors. It’s also a reminder that time to steer the direction of AI development away from private exploitation and toward public benefit is quickly running out.

The functionality of ChatGPT Search and its Atlas browser is not really new. Meta, commercial AI competitor Perplexity and even ChatGPT itself have had similar AI search features for years, and both Google and Microsoft beat OpenAI to the punch by integrating AI with their browsers. But OpenAI’s business positioning signals a shift.

We believe the ChatGPT Search and Atlas announcements are worrisome because there is really only one way to make money on search: the advertising model pioneered ruthlessly by Google.

Ruled a monopolist in U.S. federal court, Google has earned more than US$1.6 trillion in advertising revenue since 2001. You may think of Google as a web search company, or a streaming video company (YouTube), or an email company (Gmail), or a mobile phone company (Android, Pixel), or maybe even an AI company (Gemini). But those products are ancillary to Google’s bottom line. The advertising segment typically accounts for 80% to 90% of its total revenue. Everything else is there to collect users’ data and direct users’ attention to its advertising revenue stream.

After two decades in this monopoly position, Google’s search product is much more tuned to the company’s needs than those of its users. When Google Search first arrived decades ago, it was revelatory in its ability to instantly find useful information across the still-nascent web. In 2025, its search result pages are dominated by low-quality and often AI-generated content, spam sites that exist solely to drive traffic to Amazon sales—a tactic known as affiliate marketing—and paid ad placements, which at times are indistinguishable from organic results.

Plenty of advertisers and observers seem to think AI-powered advertising is the future of the ad business.

Paid advertising in AI search, and AI models generally, could look very different from traditional web search. It has the potential to influence your thinking, spending patterns and even personal beliefs in much more subtle ways. Because AI can engage in active dialogue, addressing your specific questions, concerns and ideas rather than just filtering static content, its potential for influence is much greater. It’s like the difference between reading a textbook and having a conversation with its author.

Imagine you’re conversing with your AI agent about an upcoming vacation. Did it recommend a particular airline or hotel chain because they really are best for you, or does the company get a kickback for every mention? If you ask about a political issue, does the model bias its answer based on which political party has paid the company a fee, or based on the bias of the model’s corporate owners?

There is mounting evidence that AI models are at least as effective as people at persuading users to do things. A December 2023 meta-analysis of 121 randomized trials reported that AI models are as good as humans at shifting people’s perceptions, attitudes and behaviors. A more recent meta-analysis of eight studies similarly concluded there was “no significant overall difference in persuasive performance between (large language models) and humans.”

This influence may go well beyond shaping what products you buy or who you vote for. As with the field of search engine optimization, the incentive for humans to perform for AI models might shape the way people write and communicate with each other. How we express ourselves online is likely to be increasingly directed to win the attention of AIs and earn placement in the responses they return to users.

Much of this is discouraging, but there is much that can be done to change it.

First, it’s important to recognize that today’s AI is fundamentally untrustworthy, for the same reasons that search engines and social media platforms are.

The problem is not the technology itself; fast ways to find information and communicate with friends and family can be wonderful capabilities. The problem is the priorities of the corporations who own these platforms and for whose benefit they are operated. Recognize that you don’t have control over what data is fed to the AI, who it is shared with and how it is used. It’s important to keep that in mind when you connect devices and services to AI platforms, ask them questions, or consider buying or doing the things they suggest.

There is also a lot that people can demand of governments to restrain harmful corporate uses of AI. In the U.S., Congress could enshrine consumers’ rights to control their own personal data, as the EU already has. It could also create a data protection enforcement agency, as essentially every other developed nation has.

Governments worldwide could invest in Public AI—models built by public agencies offered universally for public benefit and transparently under public oversight. They could also restrict how corporations can collude to exploit people using AI, for example by barring advertisements for dangerous products such as cigarettes and requiring disclosure of paid endorsements.

Every technology company seeks to differentiate itself from competitors, particularly in an era when yesterday’s groundbreaking AI quickly becomes a commodity that will run on any kid’s phone. One differentiator is in building a trustworthy service. It remains to be seen whether companies such as OpenAI and Anthropic can sustain profitable businesses on the back of subscription AI services like the premium editions of ChatGPT, Plus and Pro, and Claude Pro. If they are going to continue convincing consumers and businesses to pay for these premium services, they will need to build trust.

That will require making real commitments to consumers on transparency, privacy, reliability and security that are followed through consistently and verifiably.

And while no one knows what the future business models for AI will be, we can be certain that consumers do not want to be exploited by AI, secretly or otherwise.

This essay was written with Nathan E. Sanders, and originally appeared in The Conversation.

I’m a gay man in my fifties, comfortable in my skin, but I suffered severe bullying throughout school, which was often abetted by teachers. A recent class reunion prompted me to write a tell-all letter to the current school director regarding that trauma. His gracious response was incredibly healing. My family has accepted me since I came … Read More »

The post The Parent Trap appeared first on Dan Savage.

What is the polite way to ask someone to clean their teeth and deal with their neglected oral hygiene? A man’s girlfriend wants him to ignore her sexually, and focus exclusively on himself. But he wants to give her pleasure. How can they reconcile this? On the Magnum, have humans always been kinky? If the … Read More »

The post A Thong is Always a Thong appeared first on Dan Savage.

| Sun | Mon | Tue | Wed | Thu | Fri | Sat |

|---|---|---|---|---|---|---|

|

1

|

2

|

3

|

||||

|

4

|

5

|

6

|

7

|

8

|

9

|

10

|

|

11

|

12

|

13 |

14

|

15

|

16

|

17

|

|

18

|

19

|

20

|

21

|

22

|

23

|

24

|

|

25

|

26

|

27

|

28

|

29

|

30

|